Normal distribution PDF derivation


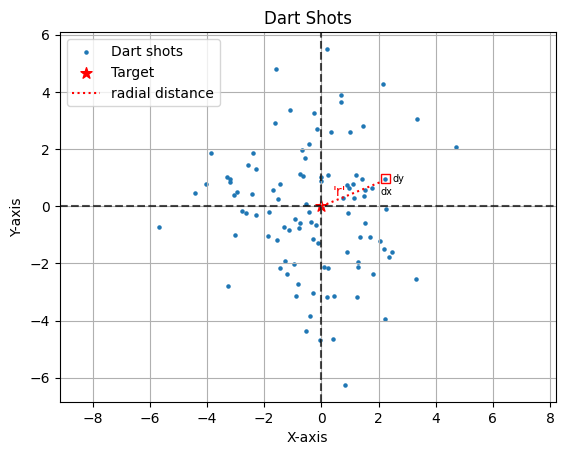

In this Jupyter notebook, we derive the PDF of the normal distribution using the dart thought experiment.

PDF:

$$f(x)=\frac{1}{\sigma \sqrt{2\pi}}\cdot e^{-\frac{1}{2} \left(\frac{x-\mu}{\sigma}\right)^2}$$

Dart thought experiment

The dart thought experiment is a conceptual way to understand the derivation of the probability density function (PDF) of the normal distribution, also known as the Gaussian distribution. Here is how the thought experiment works:

Dartboard analogy: Imagine a dartboard at which darts are thrown randomly. Assume that darts are more likely to land near the center of the board and less likely to land farther away from it. This setup is analogous to a normally distributed random variable, whose values near the mean are more likely than values far from the mean.

Two-dimensional distribution: Treat the dartboard as a two-dimensional plane whose center represents the mean of the distribution. The x and y coordinates of where a dart lands can be thought of as two independent, normally distributed random variables, each with its own mean and standard deviation.

Radial symmetry and distance: The probability density of a dart landing at a point should depend only on the point's distance from the center, not on its direction. In other words, the density at (x, y) is a function of the distance from the mean alone, not of x and y separately.
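A quick numerical check of the radial-symmetry idea, as a sketch: assuming (as the derivation below does) that X and Y are independent and identically distributed with mean 0, and borrowing σ = 2 from the simulation, the joint density f(x)·f(y) should take the same value at every point on a circle of radius r. Here `scipy.stats.norm` stands in for the normal PDF, which anticipates the result being derived, and `joint_density` is a hypothetical helper name.

```python
import numpy as np
from scipy.stats import norm

SIGMA = 2.0  # assumed standard deviation, matching the simulation below

def joint_density(x, y, sigma=SIGMA):
    """Joint density of two independent N(0, sigma^2) variables at (x, y)."""
    return norm.pdf(x, loc=0, scale=sigma) * norm.pdf(y, loc=0, scale=sigma)

r = 1.5  # radius of the test circle
angles = np.linspace(0, 2 * np.pi, 8, endpoint=False)
values = joint_density(r * np.cos(angles), r * np.sin(angles))

# Every point on the circle gets the same density: it depends only on r.
print(values)
print(np.allclose(values, values[0]))  # True
```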

Simulation of dart shots

```python
import numpy as np
from scipy.integrate import quad
import matplotlib.pyplot as plt
import matplotlib.patches as patches

np.random.seed(2609)
# 100 dart shots: x and y drawn independently from N(0, 2^2)
shots = np.random.normal(0, 2, (100, 2))

# Dashed axes through the target at the origin
plt.axhline(y=0, color='black', linestyle='--', alpha=0.7)
plt.axvline(x=0, color='black', linestyle='--', alpha=0.7)

plt.scatter(shots[:, 0], shots[:, 1], s=5, label='Dart shots')
plt.scatter(0, 0, marker='*', color='r', s=70, label='Target')

# Radial distance r from the target to a small area element dA = dx * dy
plt.plot([0, 2.15], [0, 0.88], linestyle=':', color='red', label='radial distance')
square = patches.Rectangle((2.10, 0.83), 0.3, 0.3, fill=False, color='red')
plt.gca().add_patch(square)
plt.annotate('dx', xy=(2.07, 0.4), xytext=(2.07, 0.4), fontsize=7)
plt.annotate('dy', xy=(2.5, 0.85), xytext=(2.5, 0.85), fontsize=7)
plt.annotate("'r'", xy=(2.5, 0.85), xytext=(0.4, 0.35), color='r', fontsize=10)

plt.xlabel('X-axis')
plt.ylabel('Y-axis')
plt.title('Dart Shots')
plt.axis('equal')
plt.legend(loc='upper left')
plt.grid()
plt.show()
```

Derivation of the probability density function

Main: Part 1

Consider a function $\phi$ that gives the probability density of a dart landing at the point $(x, y)$, so that the probability of landing in a small area element $dA = dx\cdot dy$ around that point is $\phi\cdot dA$. By radial symmetry, $\phi$ depends only on the distance $r$ of $(x, y)$ from the center:

$$\phi: (x,y) \rightarrow [0,\infty) \equiv \phi: r \rightarrow [0,\infty)$$

Since $\phi$ is a density over the whole plane $S$, it integrates to 1:

$$\int_{S}\phi(r)\cdot dA = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} \phi(r)\cdot dx \cdot dy = 1$$

Since the coordinates $x$ and $y$ are independent,

$$\phi(r)=f_X(x)\cdot f_Y(y),$$

where $f_X(x)$ and $f_Y(y)$ are the PDFs of $X$ and $Y$, respectively.

The distance $r$ equals $\sqrt{x^2 + y^2}$, so

$$\phi(r)=\phi\left(\sqrt{x^2 + y^2}\right)=f_X(x)\cdot f_Y(y).$$

Let $y=0$ and write $f_Y(0)=\lambda$ (a constant):

$$\begin{align*}
&\phi\left(\sqrt{x^2 + 0^2}\right)= \phi(x) =f_X(x)\cdot f_Y(0) = f_X(x)\cdot \lambda\\
&\implies \phi(x) = \lambda \cdot f_X(x)\\
&\implies \phi\left(\sqrt{x^2 + y^2}\right) = \lambda \cdot f_X\left(\sqrt{x^2 + y^2}\right)\\
&\implies \lambda \cdot f_X\left(\sqrt{x^2 + y^2}\right) = f_X(x)\cdot f_Y(y)
\end{align*}$$

Divide the last equation by $\lambda^2$:

$$\frac{f_X\left(\sqrt{x^2 + y^2}\right)}{\lambda} = \frac{f_X(x)}{\lambda}\cdot \frac{f_Y(y)}{\lambda}.$$

Assume that both random variables, $X$ and $Y$, are identically distributed, so that $f_X(\cdot)=f_Y(\cdot)=f(\cdot)$:

$$\frac{f\left(\sqrt{x^2 + y^2}\right)}{\lambda} = \frac{f(x)}{\lambda}\cdot \frac{f(y)}{\lambda}.$$

Let $g(x)=\dfrac{f(x)}{\lambda}$:

$$\implies g\left(\sqrt{x^2 + y^2}\right) = g(x)\cdot g(y).$$
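As a sanity check (not a proof), one can verify that the normal PDF, which this derivation is heading toward, does satisfy this relation. The sketch below assumes f is the standard normal PDF from `scipy.stats.norm`, sets λ = f(0), and compares both sides at 1000 random points.

```python
import numpy as np
from scipy.stats import norm

rng = np.random.default_rng(0)
x, y = rng.normal(0, 2, size=(2, 1000))  # random test points

lam = norm.pdf(0)  # lambda = f(0) for the standard normal PDF


def g(t):
    """g(t) = f(t) / lambda, with f the standard normal PDF."""
    return norm.pdf(t) / lam


lhs = g(np.sqrt(x**2 + y**2))
rhs = g(x) * g(y)
print(np.allclose(lhs, rhs))  # True
```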

Aside: Algebra

Consider the following observations:

$$n^x \cdot n^y = n^{(x+y)}$$

$$n^{x^2} \cdot n^{y^2} = n^{(x^2+y^2)}$$

Let $g(x)=e^{kx^2}$ for some constant $k$. Then

$$g(x)\cdot g(y)=e^{kx^2}\cdot e^{ky^2}=e^{k(x^2+y^2)}=g\left(\sqrt{x^2+y^2}\right)$$

This is a guess, but for continuous $g$ it is the only kind of nonzero solution: writing $h(t)=g(\sqrt{t})$ turns the relation into $h(a+b)=h(a)\,h(b)$ for $a, b \ge 0$, whose continuous nonzero solutions are the exponentials $h(t)=e^{kt}$.
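The identity can also be checked numerically. In this sketch, k = -0.3 and the test point (1.2, -0.7) are arbitrary illustrative choices; `g_abs`, which uses |t| instead of t² in the exponent, is a hypothetical counterexample showing that not every exponential works.

```python
import numpy as np

k = -0.3  # illustrative constant; the identity holds for any real k


def g_gauss(t):
    # Candidate from the aside: exponent quadratic in t
    return np.exp(k * t**2)


def g_abs(t):
    # Counterexample: exponent linear in |t| instead of quadratic
    return np.exp(k * np.abs(t))


x, y = 1.2, -0.7
r = np.hypot(x, y)  # sqrt(x**2 + y**2)

print(np.isclose(g_gauss(r), g_gauss(x) * g_gauss(y)))  # True
print(np.isclose(g_abs(r), g_abs(x) * g_abs(y)))        # False
```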

Main: Part 2

Now we have,

$$\begin{align*}
&g(x)=e^{kx^2} \text{ and also } g(x) = \frac{f(x)}{\lambda}\\
&\implies f(x) = \lambda \cdot g(x) = \lambda \cdot e^{kx^2}
\end{align*}$$

Note: $k$ must be negative; otherwise $f(x)$ would not decay as $x \to \pm\infty$ and could not integrate to 1. So we can write $k=-m^2$ for some $m \in \mathbb{R}$, $m > 0$:

$$\implies f(x)=\lambda e^{-m^2x^2}.$$

Since $f(x)$ is a PDF, it must integrate to 1:

$$\int_{-\infty}^{\infty}f(x) \cdot dx = \int_{-\infty}^{\infty}\lambda e^{-m^2x^2} \cdot dx=1$$

Let $u=mx \implies du=m\,dx$:

$$\int_{-\infty}^{\infty}\lambda e^{-m^2x^2} \cdot dx = \frac{\lambda}{m}\int_{-\infty}^{\infty} e^{-u^2} \cdot du =1$$
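A numerical check of the substitution step, as a sketch with arbitrary illustrative values λ = 0.7 and m = 1.3: both sides are computed with `scipy.integrate.quad`, and the closed form λ√π/m uses the value of the Gaussian integral evaluated in the aside that follows.

```python
import numpy as np
from scipy.integrate import quad

lam, m = 0.7, 1.3  # arbitrary positive constants for illustration

# Left-hand side: integrate lambda * exp(-m^2 x^2) directly over the real line
lhs, _ = quad(lambda x: lam * np.exp(-m**2 * x**2), -np.inf, np.inf)

# Right-hand side: (lambda / m) times the integral of exp(-u^2)
gauss, _ = quad(lambda u: np.exp(-u**2), -np.inf, np.inf)
rhs = lam / m * gauss

print(lhs, rhs, lam * np.sqrt(np.pi) / m)  # all three should agree
```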

Aside: Tricky integral

Evaluate $\int_{-\infty}^{\infty} e^{-u^2} \cdot du$.

We compute this integral in two ways: analytically and numerically.

Analytical

Consider

$$I=\int_{-\infty}^{\infty} e^{-u^2} \cdot du;$$

then, since the name of the integration variable does not matter,

$$I^2=\left(\int_{-\infty}^{\infty} e^{-x^2} \cdot dx\right)\cdot\left(\int_{-\infty}^{\infty} e^{-y^2} \cdot dy\right)$$

Written as a double integral in terms of $x$ and $y$:

$$I^2=\int_{-\infty}^{\infty} \int_{-\infty}^{\infty} e^{-(x^2+y^2)} \cdot dx \cdot dy$$

Switch from Cartesian coordinates $(x,y)$ to polar coordinates $(r,\theta)$, where $x^2+y^2=r^2$ and $dx\,dy=r\,dr\,d\theta$ (the factor $r$ is the absolute value of the Jacobian determinant of the transformation).
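The factor $r$ can be seen from the Jacobian of the map $x = r\cos\theta$, $y = r\sin\theta$:

$$
\frac{\partial(x,y)}{\partial(r,\theta)} =
\begin{vmatrix}
\dfrac{\partial x}{\partial r} & \dfrac{\partial x}{\partial \theta}\\[6pt]
\dfrac{\partial y}{\partial r} & \dfrac{\partial y}{\partial \theta}
\end{vmatrix}
=
\begin{vmatrix}
\cos\theta & -r\sin\theta\\
\sin\theta & r\cos\theta
\end{vmatrix}
= r\cos^2\theta + r\sin^2\theta = r
\quad\implies\quad dx\,dy = r\,dr\,d\theta
$$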

The limits for $r$ are $0$ to $\infty$, and for $\theta$ they are $0$ to $2\pi$:

$$\implies I^2=\int_{0}^{2\pi} \int_{0}^{\infty} e^{-r^2} \cdot r \cdot dr \cdot d\theta$$

Step 1: Calculate $\int_{0}^{\infty} e^{-r^2} \cdot r \cdot dr$. Substitute $r^2=u$, so $du=2r\,dr$:

$$\frac{1}{2}\int_{0}^{\infty} e^{-u} \cdot du =\frac{1}{2}\left[-e^{-u}\right]_{0}^{\infty}=\frac{1}{2}[0-(-1)]=\frac{1}{2}$$
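Step 1 can be checked numerically with `scipy.integrate.quad`; in this sketch the result should be close to 1/2:

```python
import numpy as np
from scipy.integrate import quad

# Radial integral from Step 1: integral of r * exp(-r^2) over [0, inf)
radial, abserr = quad(lambda r: r * np.exp(-r**2), 0, np.inf)
print(radial)  # approximately 0.5
```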

Step 2: Calculate $I^2=\int_{0}^{2\pi} \frac{1}{2} \cdot d\theta$:

$$I^2 = \frac{1}{2}\theta\Bigr|_{0}^{2\pi}=\pi \implies I=\sqrt{\pi}$$

Numerical

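```python
import numpy as np
from scipy.integrate import quad


def f(u):
    # Integrand e^{-u^2}
    return np.exp(-u**2)


# quad returns (value, estimated absolute error); keep the value
print(quad(f, -np.inf, np.inf)[0])  # ≈ 1.772453850905516
```

The above value equals $\sqrt{\pi} \approx 1.7724538509055159$.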
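The polar-coordinate form from the analytical route can be checked the same way. This sketch uses `scipy.integrate.dblquad`, which integrates `func(y, x)` with the inner variable first; here the inner variable is $r$ and the outer one is $\theta$:

```python
import numpy as np
from scipy.integrate import dblquad

# Inner variable r runs over [0, inf); outer variable theta over [0, 2*pi].
I_squared, abserr = dblquad(
    lambda r, theta: np.exp(-r**2) * r,  # integrand e^{-r^2} * r (Jacobian included)
    0, 2 * np.pi,                        # theta limits
    lambda theta: 0.0,                   # lower r limit
    lambda theta: np.inf,                # upper r limit
)
print(I_squared, np.pi)
print(np.sqrt(I_squared))  # should match the 1-D result, sqrt(pi)
```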

Main: Part 3

We had

$$\begin{align*}
\int_{-\infty}^{\infty}f(x) \cdot dx&=\int_{-\infty}^{\infty}\lambda e^{-m^2x^2} \cdot dx = \frac{\lambda}{m}\int_{-\infty}^{\infty} e^{-u^2} \cdot du = \frac{\lambda \sqrt{\pi}}{m} = 1\\
&\implies m^2=\lambda^2 \cdot \pi\\
&\implies k = -\lambda^2 \cdot \pi
\end{align*}$$

Hence,

$$f(x)=\lambda e^{-\lambda^2 \pi x^2}.$$

Now consider the variance of $X$:

$$Var(X)=\sigma^2=\mathbb{E}[(X-\mu_X)^2]=\int_{-\infty}^{\infty}(x-\mu_X)^2f(x)\cdot dx=\int_{-\infty}^{\infty}(x-\mu_X)^2 \lambda e^{-\lambda^2 \pi x^2} \cdot dx$$

We assumed $\mu_X=0$ (the target sits at the origin, and $f$ is symmetric about 0), so

$$\sigma^2=\int_{-\infty}^{\infty}x^2 \lambda e^{-\lambda^2 \pi x^2} \cdot dx=\dfrac{1}{2\pi\lambda^2}$$

This integral is not complicated, but it has many steps; I recommend following a step-by-step solution for it.
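As a compact alternative, one route (differentiation under the integral sign, a different technique from step-by-step integration by parts) reuses the Gaussian integral from the aside. For $a > 0$, the substitution $u = \sqrt{a}\,x$ gives $J(a) = \sqrt{\pi}\,a^{-1/2}$; differentiating with respect to $a$ and then setting $a = \lambda^2\pi$ (with $\lambda > 0$):

$$
\begin{align*}
J(a) &= \int_{-\infty}^{\infty} e^{-a x^2}\,dx = \sqrt{\pi}\,a^{-1/2}\\
J'(a) &= -\int_{-\infty}^{\infty} x^2 e^{-a x^2}\,dx = -\frac{\sqrt{\pi}}{2}\,a^{-3/2}\\
\implies \int_{-\infty}^{\infty} x^2 e^{-a x^2}\,dx &= \frac{\sqrt{\pi}}{2}\,a^{-3/2}\\
\implies \sigma^2 = \lambda\int_{-\infty}^{\infty} x^2 e^{-\lambda^2\pi x^2}\,dx &= \lambda\cdot\frac{\sqrt{\pi}}{2}\,(\lambda^2\pi)^{-3/2} = \frac{\lambda\sqrt{\pi}}{2\,\lambda^3\pi^{3/2}} = \frac{1}{2\pi\lambda^2}
\end{align*}
$$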

From the above expression, we get:

$$\lambda=\frac{1}{\sigma\sqrt{2\pi}} \implies f(x)=\frac{1}{\sigma\sqrt{2\pi}}\cdot e^{-\frac{1}{2}\left(\frac{x}{\sigma}\right)^2}$$

If $\mu_X = \mu \neq 0$, shift the distribution by replacing every $x$ with $x-\mu$:

$$f(x)=\frac{1}{\sigma\sqrt{2\pi}}\cdot e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2} \qquad \qquad \blacksquare$$

References

https://youtu.be/N-bI-Dsm-rw?si=HtiUuOghxs_X1SLM