Shapiro Wilk Test

In this Jupyter notebook, we're going to create a distribution chart for the W statistic, using a sample size of n, by employing Monte Carlo simulation methods. Subsequently, we will evaluate and contrast the outcomes of our self-generated W statistic against the results from the Shapiro test, which is a built-in feature of the Scipy library.

Introduction

The Shapiro-Wilk test is a widely used statistical procedure for testing the normality of a data set. Developed by Samuel Shapiro and Martin Wilk in 1965, this test is particularly effective for small sample sizes, typically considered to be less than 50. The test calculates a statistic, often denoted as W, which evaluates the degree to which a set of data conforms to a normal distribution.

Here's a brief overview of the test:

-

Purpose: The primary objective of the Shapiro-Wilk test is to determine whether a given sample comes from a normally distributed population. This is crucial in statistics, as many parametric tests assume normality of the data.

-

Method: The test compares the order statistics (sorted data points) of the sample to the expected values of these order statistics if the data were normally distributed. The W statistic is a measure of how closely the data points match the normal distribution.

-

W Statistic: The W value ranges from 0 to 1, where values close to 1 indicate that the data are likely normally distributed. A lower W value suggests deviations from normality.

-

Interpretation: The result of the test includes the W statistic and a p-value. The null hypothesis of the test is that the data are normally distributed. If the p-value is less than a chosen significance level (commonly 0.05), the null hypothesis is rejected, suggesting that the data are not normally distributed.

-

Applications: The Shapiro-Wilk test is used in various fields for preliminary data analysis, especially where normality is an assumption for further statistical tests, such as ANOVA, t-tests, and regression analysis.

Overall, the Shapiro-Wilk test is a fundamental tool in statistics for assessing the normality of data, providing an essential step in many analytical procedures.

W statistic

Theory

The W statistic is give by the following expression:

Where

is the order statistic. For instance, we draw a sample of size 10 from a distribution, we arrange the sample in ascending order. So would be the smallest value of the sample and would be the highest.

is covariance matrix where

, where

is order statistic. And is

Note: of order statistics varies with the size of the sample. This variation is due to the fact that order statistics are influenced by the ranking of data points within a sample. With changes in sample size, the distribution of these rankings shifts. As we observe an increase in sample size, the scope of potential values for any specific order statistic broadens. For example, the largest value in a bigger sample is typically higher than that in a smaller one, owing to the greater number of data points available to determine the maximum.

Calculating expectation of order statistics

In a sample of size the expected value of the th largest order statistic is given by

where

and

by Royston (1982).

Another way to calculate expected value of order statistics is by Monte Carlo simulation.

We will code both the ways, but we will use the expected values calculated by Monte Carlo simulation.

# importing libraries

from scipy.integrate import quad

from scipy.special import binom

from scipy.stats import norm

import numpy as np

import matplotlib.pyplot as plt

from scipy.stats import shapiro

# function for calculating expectations of order statistics

inf, phi, Phi = float('inf'), norm.pdf, norm.cdf

def E(r, n):

def f(x):

F = Phi(x)

return x*(1-F)**(r-1)*F**(n-r)*phi(x)

return r*binom(n, r)*quad(f, -inf, inf)[0]

Creating vector m

# defining the sample size,

n=10

# creating vector m

m=np.array([E(i,n) for i in range(1,n+1)])

m=m[::-1] #reversing the order since Royston (1982) give j-th largest order statistic

m=m.reshape((n,1))

m

array([[-1.53875273], [-1.00135704], [-0.65605911], [-0.3757647 ], [-0.12266775], [ 0.12266775], [ 0.3757647 ], [ 0.65605911], [ 1.00135704], [ 1.53875273]])

Creating covariance matrix V

To create the covariance matrix , we run a Monte Carlo simulation.

np.random.seed(269)

matrix=np.random.normal(0,1,(1000000,n))

matrix=np.sort(matrix,axis=1)

cov_matrix = np.cov(matrix, rowvar=False)

cov_matrix

array([[0.34480823, 0.17113808, 0.11640678, 0.08818278, 0.07066647, 0.05855113, 0.04903132, 0.04097532, 0.03424416, 0.02729233], [0.17113808, 0.21423015, 0.14650601, 0.11143691, 0.08958855, 0.07415785, 0.06220751, 0.05215463, 0.04342614, 0.03446663], [0.11640678, 0.14650601, 0.17499184, 0.13362146, 0.1075927 , 0.08917342, 0.07486115, 0.06289104, 0.05235331, 0.04142845], [0.08818278, 0.11143691, 0.13362146, 0.15778144, 0.12737174, 0.10577756, 0.08898409, 0.07488866, 0.06251412, 0.04923958], [0.07066647, 0.08958855, 0.1075927 , 0.12737174, 0.15084324, 0.12552122, 0.10573817, 0.08902319, 0.07434763, 0.05857413], [0.05855113, 0.07415785, 0.08917342, 0.10577756, 0.12552122, 0.15084589, 0.12736883, 0.10756093, 0.08972441, 0.07074192], [0.04903132, 0.06220751, 0.07486115, 0.08898409, 0.10573817, 0.12736883, 0.15776356, 0.13360747, 0.11161433, 0.0881613 ], [0.04097532, 0.05215463, 0.06289104, 0.07488866, 0.08902319, 0.10756093, 0.13360747, 0.17481381, 0.14665464, 0.11628571], [0.03424416, 0.04342614, 0.05235331, 0.06251412, 0.07434763, 0.08972441, 0.11161433, 0.14665464, 0.21464918, 0.17107261], [0.02729233, 0.03446663, 0.04142845, 0.04923958, 0.05857413, 0.07074192, 0.0881613 , 0.11628571, 0.17107261, 0.34390761]])

m=(np.mean(matrix,axis=0)).reshape(10,1)

m

array([[-1.53889117], [-1.00124066], [-0.65582729], [-0.37548451], [-0.12268297], [ 0.12199091], [ 0.3747911 ], [ 0.65507792], [ 1.00003415], [ 1.53729462]])

Creating the length C

C = (m.T @ (np.linalg.inv(cov_matrix)) @ (np.linalg.inv(cov_matrix)) @ m)**(0.5) # '@' multplies two matrices

C

array([[6.19427319]])

Creating vector a

a=(m.T @ (np.linalg.inv(cov_matrix)))/(C)

a

array([[-0.57207524, -0.3328623 , -0.2068158 , -0.12950197, -0.04215577, 0.04442722, 0.1267467 , 0.20638143, 0.32896421, 0.57598846]])

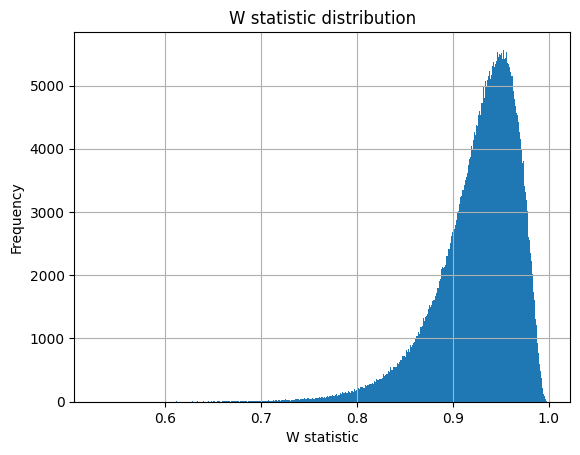

Monte Carlo simulation to plot W statistic distribution

# generating many samples

iteration=1000000

np.random.seed(2609)

x_mat=np.random.normal(0,1,(iteration,n))

x_mat=np.sort(x_mat,axis=1)

x_mat

array([[-2.02258982, -1.19155678, -0.81895459, ..., 1.30640262, 1.45529678, 2.35676065], [-2.3236977 , -1.14898889, -0.55883736, ..., 0.19719931, 0.65898349, 1.63017364], [-1.38408064, -1.08530663, -0.80016064, ..., 0.56901273, 1.2402628 , 2.40098544], ..., [-1.44008624, -1.24841506, -0.65395294, ..., -0.04813313, 0.68951906, 1.21878072], [-0.81335319, -0.78517371, -0.69083909, ..., 0.77903271, 1.52354765, 1.81966713], [-1.38512201, -1.02405077, -0.93704861, ..., 0.60640053, 0.66852956, 1.35122507]])

# calculation the variance of each sample

x_var=np.var(x_mat, axis=1,ddof=1)

x_var

array([1.85990199, 1.09925087, 1.35017523, ..., 0.64084908, 0.93247314, 0.75201877])

denomenator=(x_var)*(n-1) #this is our denomenator of W statistic

denomenator=denomenator.reshape(1,iteration)

denomenator

array([[16.73911793, 9.89325784, 12.15157709, ..., 5.76764168, 8.39225825, 6.76816897]])

numerator = (a @ x_mat.T)**2 #this is our numerator of W statistic

numerator=numerator.reshape(1,iteration)

numerator

array([[16.3838163 , 9.54557876, 11.27184021, ..., 5.40733275, 7.51559228, 6.57310058]])

W=numerator/denomenator

W=W.flatten() #without flatten, plt takes a lot time to plot hist

W

array([0.97877417, 0.96485697, 0.92760307, ..., 0.93752924, 0.89553873, 0.97117856])

plt.hist(W,bins=1000)

plt.grid()

plt.title("W statistic distribution")

plt.xlabel("W statistic")

plt.ylabel("Frequency")

plt.show()

Comparing our Distribution with Scipy-Stats' Shapiro Test

Here we will compare the accuracies

iter=10000

#y=np.random.normal(200,9.5,(iter,10))

y=np.random.uniform(200,1000,(10000,n))

y=np.sort(y,axis=1)

# Standardize the matrix

mean = (np.mean(y,axis=1)).reshape(iter,1)

std_dev = (np.std(y,axis=1,ddof=1)).reshape(iter,1)

y_standardised = (y - mean) / std_dev

shapiro_stat, shapiro_p=np.array([]),np.array([])

for i, row in enumerate(y_standardised):

shapiro_stat, shapiro_p = np.append(shapiro_stat,shapiro(row)[0]),np.append(shapiro_p,shapiro(row)[1])

W_sorted=np.sort(W)

our_stat=np.array([(((a @ row.T)**2)/(np.var(row,ddof=1)*(n-1)))[0] for row in y_standardised])

our_p=np.array([((W_sorted<i).sum())/len(W) for i in our_stat])

print(f"Accuracy of in-built test:{round((((shapiro_p>0.05).sum())/iter)*100,2)} %")

print(f"Accuracy of our test:{round((((our_p>0.05).sum())/iter)*100,2)} %\n")

Accuracy of in-built test:92.24 %

Accuracy of our test:92.07 %

References

Harter, H. L. (1961). Expected Values of Normal Order Statistics. Biometrika, 48(1/2), 151–165.

https://math.mit.edu/~rmd/465/shapiro.pdf

Royston, J. P. (1982). Algorithm AS 177: Expected normal order statistics (exact and approximate). Journal of the royal statistical society. Series C (Applied statistics), 31(2), 161-165.

Shapiro, S. S., & Wilk, M. B. (1965). An Analysis of Variance Test for Normality (Complete Samples). Biometrika, 52(3/4), 591–611.